"Progress"?

The idea that technological growth has accelerated to the point where we're hurtling toward some sort of singularity is debatable. There is certainly a case to be made that our generation has in fact seen less technological progress than our parents or even grandparents did. Mum and Dad witnessed man walk on the moon, but humanity has had little to show for it since. We are reminded of new bio- and nanotechnologies, but how widespread an impact have they had on day-to-day human life? Despite 'breakthroughs' in medical science, morbidity and mortality from common afflictions such as cancer continue unabated, even in developed countries.

"The pace of change has actually, generation by generation, been slowing down...The World of today is not as different from the World of 1959 as the World of 1959 was from 1909" - Paul Krugman (Nobel Economics Laureate), speaking at Worldcon, 2009

Consider someone born at the end of the nineteenth century and dying somewhere in the middle of the twentieth. What wonders of technological progress did they witness? Electric light and telephones, cars and airplanes, the atomic bomb and nuclear power, vacuum electronics, semiconductor electronics, plastics, the computer, most vaccines and antibiotics and the granddaddy of human achievement thus far, manned space flight. In 1903, for example, the Wright Brothers achieved man's first powered flight. Less than 60 years later, Yuri Gagarin was in space. In human terms, these achievements, in such a short space of time, are vast. Child mortality was also cut drastically, by up to 80%, during the period.

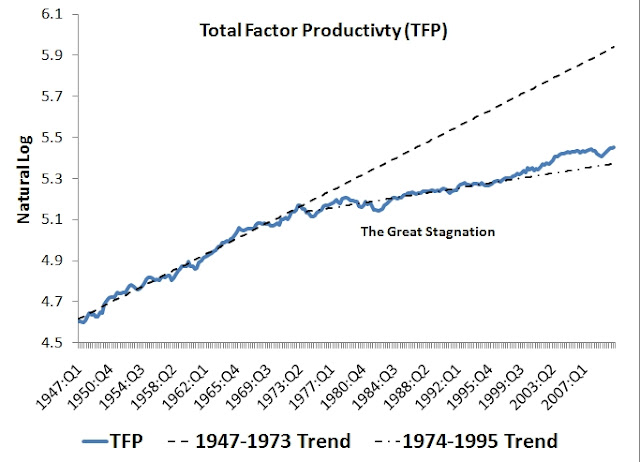

Graphs like the one above usually show a line that climbs gradually. Start such a graph around 1750 and the incline becomes even steeper, shooting suddenly upward. That in itself is perhaps not so surprising when you consider what was happening in the middle of the eighteenth century: a new economic system predicated on 'growth' was born. New markets emerged, and an increasingly fine division of labour drove technological progress. The problem is that this is still the view taken today: a simple-minded extrapolation of an existing pattern. Yet where the industrial revolution ushered in new markets and genuine growth, what we actually have now is a tinkering with existing markets, not new 'progress'. What is arguably changing is merely the role of expertise, in an environment where it is increasingly difficult to decide whom to trust. The technically 'illiterate' put immense trust in 'experts'. When a scientist says "progress is being made", everybody else jumps, including specialists in unrelated fields. That is not to say progress isn't being made, but such statements create an illusion of grandeur that the reality does not merit.

The doubling of transistor counts, and with them computing power, roughly every two years over the last thirty years (as described by Moore's Law) is the most common example used to indicate 'progress'. However, this does not mean that we are in an age of accelerated technological development. Moore's Law was successful in predicting the development of semiconductors and computing power partly because the industry adopted it as a road map and spent vast sums on R&D to meet its targets. That the curve keeps climbing does not mean Moore's Law has no physical limits, or that the upward trend will continue indefinitely. There are significant rumblings in the industry that this unbridled, exponential growth in computing power may soon be coming to an end. Professor Steve Furber of the University of Manchester, for example, says that processing speeds are starting to reach their peak. According to Furber, who co-designed the ARM processor for Acorn Computers in the 1980s, it is becoming increasingly economically unviable to make transistors 100 to 150 atoms across, as designing such chips takes hundreds of man-hours. Furber predicts that within the next ten years the doubling of processor speeds will stop. Even as computing appears to be reaching maturity, it remains to be seen whether Moore's curve can be applied to other industries, be it nanotechnology, biotechnology or the cognitive sciences.
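
To see how much any 'exponential progress' claim depends on the doubling period assumed, here is a small illustrative calculation. It is a sketch only: the function name `growth_factor` is ours, and it assumes pure compounding with no physical limits, which is exactly the assumption questioned above. It compares a twelve-month and a twenty-four-month doubling period over thirty years.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """How many times a quantity multiplies if it doubles every
    `doubling_period_years` for `years` years (pure compounding, no limits)."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    for period in (1.0, 2.0):
        factor = growth_factor(30, period)
        # period 1 -> x1,073,741,824 ; period 2 -> x32,768
        print(f"doubling every {period:g} year(s) for 30 years: x{factor:,.0f}")
```

Halving the doubling period turns a roughly thirty-thousand-fold increase into a roughly billion-fold one, which is why small disagreements about the 'law' translate into very different stories about progress.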

What it boils down to is a matter of perspective. "Singularitarian" optimists claim that the impact of innovation on our lives is only increasing, and extrapolate that such 'progress' will continue indefinitely. The question, however, is whether any innovation of our lifetime has really had as great an impact on our lives as, say, the airplane or the car. Indeed, it is difficult to point to any invention of our own time as revolutionary as those of the past. Consider for a moment the development of propulsion technologies. It took one hundred and fifty years to get from the external combustion engine to internal combustion. From internal combustion to the turbine engine took fifty years, and from the turbine engine to the rocket engine, fifteen. What since? That in itself should indicate a slowdown: the last breakthrough came only fifteen years after the one before it, yet it has been some sixty years since. If that is not stagnation, then what is?

When plotted on a graph, the progress of a technology generally follows an S-curve: a slow early rise, a quasi-exponential phase, and finally a slow saturation as the technology passes into wider public use.
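
The S-curve is often modelled with a logistic function. As a minimal sketch (the choice of model is ours, and the ceiling `K`, steepness `r` and midpoint `t0` are arbitrary illustrative values, not fitted to any real technology), the three phases can be sampled like this:

```python
import math


def logistic(t: float, K: float = 1.0, r: float = 1.0, t0: float = 0.0) -> float:
    """Logistic S-curve: slow start, near-exponential middle, saturation at K.
    K, r and t0 are illustrative assumptions, not measured values."""
    return K / (1.0 + math.exp(-r * (t - t0)))


if __name__ == "__main__":
    # Sample the curve from well before to well after the midpoint t0 = 0.
    for t in range(-6, 7, 2):
        print(f"t={t:+d}  level={logistic(t):.3f}")
```

Early on, each unit step multiplies the level by roughly e^r, so the first half of an S-curve is easy to mistake for open-ended exponential growth; the saturation only becomes visible later.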

Broadly speaking, as one technology approaches its asymptotic limits, it invariably kicks off a similar pattern of growth in other, related technologies. This is all very well, but the question is whether we are doing enough to push these boundaries. We are nowhere near the limits imposed by physics, and the more worrying prospect is that there are no signs that we intend to go much further into new fields. As physicist Richard Feynman succinctly put it in 1959, "there's plenty of room at the bottom". That remains as true today, despite the fulfilled prophecies of Moore's Law over the past few decades.

Other points raised when attempting to measure how much progress we have made in the last forty years or so take the form of questions. What metric should one use to measure change? What is actually needed? What do these changes really signify? What have they actually 'done'? Have we gained only luxury frivolities, gadgets and gimmicks, or genuine improvements to our lives? Indeed, what is progress? Of course, with the internet and widespread mobile phone use, society operates very differently from how it did twenty, or even ten, years ago. Naturally the internet will be cited as the pinnacle of human progress in the last twenty years, but how many internet-like events have we had in that time compared with the number of breakthroughs in the period before? We still use the microwave, the freezer, the cooktop, the oven, the combustion engine and steam from power plants to generate electricity, to name but a few innovations that have been with us for the better part of the last century. Computers are faster, and we now have flatscreens, but has anything 'huge' happened? We don't exactly have anti-gravity, teleportation or medicine able to grow new bodies, as imagined fifty or sixty years ago. Sure, things might be 'better', but are they revolutionary? Indeed, what genuinely new technology is out there? Or is 'progress' really just iteration upon iteration?

Technology now seems to be more evolutionary than revolutionary, with the exception of the internet. To be ultra-cynical about the state of science, one might wager that 'high science' is now more concerned with whether a brush's bristles sweep household dust more efficiently than the brooms of old. Technology thus hasn't revolutionised the fundamental way we do things, except how we communicate. You still have to get up, brush your teeth, have a shower, drive to work, etc.

The Case For Progress

Of course, this is not the whole story. Looking at this on a linear scale suggests that the 'big' inventions all happened between 1830 and 1960. What you have to remember is that those inventions took decades to reach maturity, whereas current inventions take less time to make an impact, thanks to the supporting technologies that came before them. People often overlook the initial struggle to get a new technology into production, where it can finally benefit people. So an apparent slowdown does not necessarily mean we have reached the pinnacle of development and that all that is left to do is 'tweak'.

The rate at which we exchange information has increased exponentially, to the point where information technology has helped accelerate overall progress. Agriculture is another area where humans have made huge strides, especially recently. In 1880 around 49% of the entire world's labour force worked in agriculture. As recently as 1970, the share of agricultural workers in the developed world was as high as 20%; now it is only 2.5%. In underdeveloped regions it was closer to two-thirds, whereas now it is about 43.5%. From a global perspective there is certainly an argument for progress. Worldwide access to smartphones and communications is unprecedented. Even if Western life doesn't appear to have changed all that much, consider the rest of the world, which is experiencing swift change from farm to iPhone. Exponential population growth has also contributed more 'brains', which, coupled with global communications, should mean a surge in development. More connected people means more shared knowledge and thus more innovation. Or so the theory goes.

Humanity is at a stage it has never reached before. We have to worry far less about survival than in the past, leaving us free to work on the more complex problems of the universe. Cancer treatments are better, survival rates are up, as is life expectancy. On space travel it is not all gloom either. Consider the Mars rovers, which would not last the years they currently do if built with sixties technology. We also have long-term orbiters around Saturn and other worlds, and there is a permanent human presence in space. There are also holes in the argument that the benefits of innovation in the period 1880-1970 are more tangible because they correlate with falling mortality. In statistics it is possible to show a correlation with almost anything. In reality, falling mortality had less to do with the introduction of nuclear power, electricity and antibiotics than you might think. The sharp drop in death rates owed more to modern plumbing and sewer systems, which separated waste from drinking water: a much simpler, common-sense measure than the complexities involved in splitting the atom. Indeed, antibiotics only came into widespread use in the mid-twentieth century, after the 'bulk' of the decrease in mortality had already occurred.

No doubt the period 1880-1970 saw the world change dramatically. The question is whether the period since has been just as dramatic. Perhaps there is an argument that the current generation is so used to change that we don't even notice it. Moore's Law was put forward in 1965, and yet technology arguably continues to push forward. Indeed, technological leaps remain a matter of perspective. Einstein, for example, merely refined Newton's approach, which was already close to 'reality'. Humanity is constantly refining and drawing closer to the "truth". The leaps may seem smaller, but now they are more numerous.

That one might not benefit directly from nano- and biotechnology does not mean there is no scientific progress in general, or that these fields are not having a huge impact on our understanding. You could easily make the same case about the A-bomb and fission power: from our grandparents' perspective, how revolutionary did they seem at the time? Consider, too, the possibility that we have already done most of the 'obvious', 'big' stuff, even if it has yet to be fully deployed. The focus of R&D these days appears to have shifted from 'big', 'obvious' things like engines, televisions and radios to more finicky, small, non-consumer fields like nanotech, biotech, medical tech, robotics and new materials. Advances in these fields are reported almost daily, for anyone paying enough attention; they simply fall off the 'average' person's radar. One way to view the perceived slowdown is through the S-curve. While we may well be reaching the maturation of several fields of science, we are also entering new fields of endeavour whose impact is yet to be seen, much like radio at the turn of the twentieth century.

Of course, the elephant in the room is the internet. It has been the one truly revolutionary breakthrough of the last forty years, and may well prove to be one of the greatest in mankind's history. It is hard to overstate how much of a revolution it is. Further, the World Wide Web is not just a single 'point' on the technological timeline. Treating it that way would be like treating electricity as a single point and ignoring every subsequent electrical invention because it's 'already covered'. The internet has created a new landscape in which data lives, changing how people work, live and play. That it marks one point on the line of progress does not mean its offspring are 'not new'.

Science-Fiction

For all the advanced manufacturing techniques the industrial revolution brought to the world, one of its biggest impacts was on culture, particularly noticeable in the new genre of science-fiction novels. Science fiction from the 50s and 60s in particular taught us that we should have flying cars and lunar colonies by the end of the twentieth century. There is most definitely a case to be made that the genre sparked imaginations, and perhaps even gave an entire generation unrealistic hopes for the future. Then again, how far would we have advanced were it not for science fiction?

Futurism and science fiction were game-changing products of industrialization. They offered a new way of thinking, in which religion and philosophy would no longer shape mankind's future; technology would usurp these outmoded constructs, and science and reason would guide humanity. The reality, however, is that these depictions of a glorious future may have inflated expectations, when no one could realistically have predicted what stage of development we 'should' have reached by now. There is no way of knowing which of today's inventions will turn out to be 'significant'. Progress might seem 'slow' because, for the first time, we can document and share every little step we take with the rest of the world. The problem with statements such as 'technological progress has slowed down' is that they take into account only a short period of time, ignoring the need for a longer-term view. Indeed, while the 'scenic impact' of current progress may be lower, that does not mean it has halted altogether. Our generation might not have seen the advent of the automobile, but we did see the PC, which is now far more capable than any computer imagined in pre-1995 science fiction.

Conclusions

Whether technological progress has slowed down depends on how you measure it. Some of it even comes down to how much it matters to you personally. For example, in 1989 major data centres had whole rooms filled with dishwasher-sized gigabyte drive units, giving a whole room a total capacity of 600GB. These were multimillion-dollar facilities. Now you can walk into a shop and buy a 16GB USB drive the size of your thumb, while a 1TB hard drive sits in your computer at home: almost double the entire capacity of that 1989 room, in a unit that fits in the palm of your hand. And all for under £100. On one level that's incredible, but some might easily dismiss it as the same thing, only smaller.
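
The arithmetic behind the 1989 comparison is easy to check. This sketch uses the capacities given in the paragraph and assumes decimal units (1TB = 1,000GB, as drive makers quote them); the constant names are ours.

```python
import math

ROOM_1989_GB = 600      # total capacity of the 1989 data-centre room
HOME_DRIVE_GB = 1_000   # a 1TB hard drive, assuming decimal gigabytes
THUMB_DRIVE_GB = 16     # a 16GB USB drive

ratio = HOME_DRIVE_GB / ROOM_1989_GB
sticks_needed = math.ceil(ROOM_1989_GB / THUMB_DRIVE_GB)

print(f"1TB drive vs 1989 room: {ratio:.2f}x")            # 1.67x
print(f"16GB drives to match the room: {sticks_needed}")  # 38
```

So "almost double" is about 1.67 times, and a few dozen thumb drives would hold everything that room did.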

The difference between these perceptions comes down to what you class as a transformative breakthrough and what as a progressive refinement of existing technology. Depending on the view, the same change can be read either as 'progress' or as 'slowdown'. Garry Kasparov, the Russian chess grandmaster, maintains that the last truly "revolutionary technology was the Apple II", released in 1977. As he sees it, innovation is slowly grinding to a halt, in both the public and private spheres. Indeed, there's certainly a case to be made for a 'culture of optimization'.

What's Really Changed?

The difficulty lies in pointing to anything from the last forty years as dramatic as splitting the atom. When nothing is patently obvious, one begins to wonder whether the speed of innovation is decreasing. Are we merely making things smaller and faster? Faster computers are all very nice, but are they really symbols of change? Pointing to faster computers would merely be citing an innovation on an existing theme, not an invention. Consider computers for a moment. Yes, they've got smaller and faster. However, all current computers are merely re-imaginations of the PDP-11 architecture developed in the seventies, with minor improvements. Likewise, the iPhone, the pinnacle of technology available to the average consumer, is just a smaller version of the Memex proposed by Vannevar Bush around 1945.

No doubt the complexity of 'old' technology has increased, but the basic premise of its function is still the same as fifty years ago. Even if it is somewhat astounding on one level to compare today's cars with those of the past, can you really say that seat-warmers fundamentally indicate progress? While consumer electronics, medicine, computers and communications have all got smaller in the last forty years, the cultural and social change has been less pronounced. We still lead an automobile-centric, consumer-based, culturally egalitarian lifestyle that would be very recognisable to anyone from the 1950s. We've had reactors and rockets for generations, yet we still wallow in our own filth, murdering each other for the oil that poisons our environment. Likewise, the physical structure of our cities hasn't changed. Infrastructure is pretty much the same, in large part because of the enormous cost of replacing it all. Updating our power plants and laying track for maglev rail networks, for example, would be an immense undertaking costing hundreds of billions. Robotic and molecular manufacturing techniques have the potential to improve efficiency immeasurably at a fraction of the cost, but for now we are not quite there.

The only arguably significant change is the internet. But even then, it is arguable that gross progress has slowed. Sure, 1959 had no internet, PCs or mobile phones, but the broad features (general media, a post-industrial service economy, mass transport, the power grid and the military) were pretty similar. True technological breakthroughs have the power to fundamentally reshape societies. Think for a second how a clean, infinite energy technology would affect the entire world. Consider the US federal budget for 2010: some $3.5 trillion. Less than a third of a percent of it, $5.1 billion, was allocated to energy R&D. In the private sector, energy firms collectively allocated 0.3% of all sales to R&D. In other words, a pittance. Think what we might be capable of if proper resources were invested in replacing the internal combustion engine, a machine dating back to the mid-1800s. New sources of energy would not only be cleaner and weaken oil despots; a new, abundant energy source would have the potential to eliminate poverty for good.
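
The budget share quoted above can be checked directly. A quick sketch using the figures as given ($5.1 billion of roughly $3.5 trillion); the constant names are ours and the totals are the approximate ones from the text, not official line items.

```python
FEDERAL_BUDGET_2010 = 3.5e12   # US federal budget, 2010 (approximate, as quoted)
ENERGY_RD_2010 = 5.1e9         # allocated to energy R&D (as quoted)

share_percent = ENERGY_RD_2010 / FEDERAL_BUDGET_2010 * 100
print(f"Energy R&D share: {share_percent:.3f}% of the budget")  # 0.146%
```

That is roughly 0.15%, comfortably under the 'third of a percent' ceiling and closer to about a seventh of one percent.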

The Law Of Money

The lack of a space shuttle replacement isn't the only example of man's ingenuity stagnating. Other high-tech projects, notably the supersonic airliner Concorde, now retired, have similarly faded into obscurity. Man was on the moon before I was born and hasn't been back since. There is, of course, a huge factor behind this: money. The current economic system rewards only success, and failure is severely punished. Failures are costly, but unfortunately they are a prerequisite to making any discovery.

Ultimately, companies with the resources have too little incentive to invest in long-term research, and are unwilling or unable to do so. Publicly traded companies have to be conscious of their every move, because they are oriented toward short-term shareholder value, much to the detriment of long-term viability. They simply cannot justify open-ended speculative research when Wall Street's money is focused on short-term gains, not long-term investment. A classic example was Procter & Gamble's failed 'Olean' project, which saw its stock drop from $70 to $15. Not even reputable research giants can afford such failures. This is a tragedy in itself, as scientific progress hinges on such failures.

"I doubt he'd [Magellan] get funding from venture capitalists today. In fact it's hard to imagine most of the visionaries of the past in today's risk-averse environment getting funding" - Garry Kasparov, talking to Palantir Technologies, 2010

This raises a wider debate over whether capitalism is driving progress or holding it back, given that such projects ultimately depend on money, not on the decision to undertake them. Capitalism is, of course, first and foremost self-interested. It will go for what is profitable, which is not necessarily what benefits the advancement of mankind. Smartphones and MP3 players are profitable, but they hardly span all areas of science. Certainly, it's good that existing technology works better and is being improved upon, but arguably what is needed more is a system that promotes scientific progress in its broadest sense. It also raises the question of whether we are truly making things better, or merely cheaper.

"You should stop printing money because that insults my intelligence. Using a lot of money to save something beyond rescue - the inefficient and corrupt banking and investment system - instead of investing in real stimulus was a bad decision, long term, mid term, and maybe even short term" - Garry Kasparov, talking to Palantir Technologies (asked what advice he would have for the Obama Administration), 2010

We now have more thinkers, scientists, engineers and industrialists alive on this planet, right now, than at any other point in history. In fact, there are more of them alive now than in all of previous history combined. The problem is that they are inhibited, mainly by greed. Nor are scientists exempt from the monetary system; the law of diminishing returns applies to them too. Doubling the number of scientists does not automatically double the results. Rather, failing to critically select the best and brightest could well spread resources so thin that scientists are forced to spend half their time competing for funding instead of doing actual science. Lacking funds, they could conceivably spend the other half twiddling their thumbs on less expensive research, forgoing work with greater scientific return.

The Case For Progress

Of course, this is not the whole story. The problem with looking at this on a linear scale shows the idea that 'big' inventions happened between 1830 and 1960. What you have to remember is that these inventions took decades to reach maturity whereas current inventions now take less time to make an impact thanks to their ancillaries before them. People often overlook the initial struggle to get new technology into production where it can finally benefit the people. Thus, it does not necessarily mean we have reached the pinnacle of development and all that's left to do is 'tweak'.

The way we exchange information has increased exponentially to the point where information technology has helped accelerate overall progress. Agriculture is another area where humans have made huge strides, especially recently. In 1880 around 49% of the entire world's labour force was involved in agriculture. As recently as 1970, the percentage of agriculturists in the developed world was as high as 20%. Now it is only 2.5%. In underdeveloped regions it was something closer to two-thirds, whereas now it is about 43.5%. From a global perspective there is certainly an argument for progress. The worldwide access to smart phones and communications is unprecedented. Just because Western life doesn't appear to have changed all too much, consider the rest of the world which is experiencing swift changes from farm-to-iPhone. An exponential growth in population has also contributed more 'brains', and coupled with global communications can only mark a surge in development. More connected people means more shared knowledge and thus new innovations. Or so the theory goes.

For the first time humanity is at a stage it has never found itself in before. We have to worry far less about survival than in the past, free to work on the more complex problems of the universe. Cancer treatments are better, survival rates are up, as is life expectancy. On space travel it is not all gloom either. Consider the Mars rovers, which wouldn't last the years they currently do with sixties technology. We also have long-term orbiters around Saturn and other worlds. Indeed, there is a permanent human presence in space. There are also holes in the argument that the clear benefits of innovation in the period 1880-1970 are more tangible because of the correlation with falling mortality data. In statistics it is possible to show correlations with almost anything. In reality, falling mortality has had less to do with the introduction of nuclear power, electricity and antibiotics than you might think. The reason for the sharp drop in death rates was more to do with modern plumbing and sewer systems which effectively separated waste from drinking water - a much simpler and more common-sense based implementation than the complexities involved in splitting the atom. Indeed, widespread use of antibiotics only occurred in the mid-twentieth century, after the 'bulk' decrease in mortality had already occurred.

No doubt, the period 1880-1970 saw the world change dramatically. The question is whether the period since has been just as dramatic. Perhaps there is an argument to be made that the current generation is so used to change that we don't even notice it. Moore's Law was put forward in 1965 (and revised in 1975), and yet technology arguably continues to push forward. Indeed, technological leaps remain a matter of perspective. For example, Einstein refined Newton's physics, which was already close to 'reality'. Humanity is constantly refining its models and drawing closer to the "truth". The leaps may seem smaller, but now they are more numerous.

Of course, the elephant in the room is the internet. It has been the one truly revolutionary breakthrough of the last forty years, and may well prove to be one of the greatest in mankind's history. It is hard to overstate how much of a revolution it truly is. Further, the internet is not just a single 'point' on the technological timeline. That would be like treating electricity as a single point and ignoring every subsequent electrical invention because it is already covered. The internet has created a new landscape in which data lives, changing how people work, live and play. Even if it marks one point on the line of progress, that doesn't mean its offspring are 'not new'.

Science Fiction

For all the advanced manufacturing techniques the industrial revolution brought to the world, one of its biggest impacts was on culture, most noticeably through the new genre of science-fiction novels. Science fiction from the 50s and 60s in particular taught us to expect flying cars and lunar colonies by the end of the twentieth century. There is most definitely a case to be made that the genre sparked imaginations, and perhaps even gave an entire generation unrealistic hopes for the future. Then again, would we have advanced as far as we have if it were not for science fiction?

Futurism and science fiction were game-changing products of industrialization. They offered a new way of thinking: no longer would religion and philosophy shape mankind's future; technology would usurp these outmoded constructs, and science and reason would guide humanity. The reality, however, is that these depictions of a glorious future have perhaps inflated expectations, when nobody could realistically have predicted what stage of development we 'should' have reached by now. There is no way of knowing which of today's inventions will turn out to be 'significant'. Progress might seem 'slow' precisely because, for the first time, we have the ability to document and share every little step we take with the rest of the world. The problem with statements such as 'technological progress has slowed down' is that they take into account only a short period of time and neglect the longer-term view. Indeed, while the 'scenic impact' of current progress may be lower, that does not mean it has halted altogether. While our current generation might not have seen the advent of the automobile, we did see the PC, which is now far more capable than any computer imagined in pre-1995 science fiction.

Conclusions

Whether technological progress has slowed down depends on how you measure it. Some of it even comes down to how much a given advance matters to you personally. For example, in 1989 major data centres had whole rooms filled with dishwasher-sized gigabyte drive units. A whole room had a total capacity of 600GB, and these were multimillion-dollar facilities. Now you can walk into a shop and buy a 16GB USB drive the size of your thumb, while a 1TB hard drive sits in your personal computer at home. That drive alone holds roughly 1.7 times the entire capacity of that 1989 room, in a device that fits in the palm of your hand, and all for under £100. On one level that's incredible, but some might easily dismiss it as the same thing, only smaller.

The difference in these perceptions comes down to what you class as a transformative breakthrough technology and what as a progressive refinement of existing technology. Depending on the view, the same facts can be read as either 'progress' or 'slowdown'. Garry Kasparov, the Russian chess grandmaster, maintains that the last truly "revolutionary technology was the Apple II", released in 1977. As he sees it, innovation is slowly grinding to a halt in both the public and private spheres. Indeed, there is certainly a case to be made that ours is a 'culture of optimization'.

"We are surrounded by gadgets and computers like never before. They are better each time; a little faster, a little shinier, a little thinner. But it is derivative, incremental, profit margin-forced, consumer-friendly technology - not the kind that pushes the whole world forward economically...I feel we are now living in the era of the slowest technological progress in the past few hundred years" - Garry Kasparov, talking to Palantir Technologies, 2010

The question I ultimately ask is: is it really OK to be satisfied with consumer toys and covet them as shining beacons of humanity's ingenuity? Fundamentally, we are still using many of the same technologies invented over the last two hundred years. Call it a lack of courage or complacency, but to a certain degree humanity seems to have lost its passion for sweeping changes. We have replaced our drive to innovate with a focus on incremental changes to existing technologies. An example of the kind of sweeping change I mean would be true artificial intelligence: not the kind of predictive algorithm behind Amazon's shopping recommendations, but a machine that can handle information with the flexibility of a human mind. As a society, we've been in stasis for the best part of a century when we should really be trying to take civilization to the next level. We must once again seek out new frontiers, venture into the unknown, and become explorers again. Humanity's future is still unwritten. We certainly have not invented everything; in fact, it is arguable that we haven't invented anything yet compared to what the future might bring.
